Thoughts on Go and Memory Management

Memory management. Wars have been fought over it — mostly virtual ones.

Lifetimes, ownership, manual vs. GC… it’s a rabbit hole.

Take Go, for example. It has a concurrent tri-color mark-sweep garbage collector, and it’s good enough for a lot of workloads. Stop-the-world pauses are short (typically well under a millisecond in recent releases), marking and sweeping run concurrently with your program, and a write barrier keeps the collector’s view of the heap consistent while your code keeps mutating it.

But “good enough” doesn’t mean “perfect.” In high-performance or latency-sensitive applications, such as games or financial systems, Go’s garbage collector can become a bottleneck.

In these cases, developers often have to resort to other languages, like Rust or C++, to get the control they need over memory management, leading to more complex codebases and increased cognitive load.

Rust is amazing, but lifetimes and ownership can be a pain in the …neck. You can end up spending more time convincing the compiler that your code is safe than writing the code itself, and the rest of it figuring out which ownership strategy (plain borrows, Box, Rc, Arc) fits which part of your system.

In another corner of the arena, languages like C++ offer, well, everything. Manual memory management gives you total control, but at the cost of complexity and bugs like memory leaks and dangling pointers. You only know true pain once you have had to debug a segmentation fault.

So, yes, Go’s simplicity is a feature. It lets teams move fast, focus on business logic, and not drown in segfaults. But like all features, it comes with trade-offs.

Let’s focus on how Go manages memory and see what works and what hurts:

What Works Well

- Developer speed: Go’s GC reduces cognitive load. Most devs never think about memory and still ship reliable services.

- Predictability at scale: For many backends, the GC overhead is acceptable. Latency might spike now and then, but the system stays up.

- Ecosystem consistency: There’s no fragmentation of memory management strategies. The language enforces “one way,” which reduces chaos.

Where It Hurts

- Latency-sensitive workloads: Even with short pauses, GC work competes with your code for CPU, and tail latency creeps up. If you’re writing trading systems or low-latency games, you feel it.

- Hidden costs: It’s easy to write code that looks fine but allocates heavily. Without profiling, you won’t see the allocation cost until it’s too late.

- Limited control: You can’t opt into RAII-like patterns or linear types. Go’s escape analysis helps, but it’s opaque unless you ask the compiler to print its decisions (go build -gcflags=-m), and a small refactor can change the outcome.
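One cheap way to see these costs without a full profiler is to ask the runtime how many GC cycles a piece of work triggered. The sketch below is only an illustration: churn and gcDelta are names I made up, and the buffer sizes are arbitrary. It relies on runtime.ReadMemStats, which reports the cumulative cycle count (NumGC) and the total stop-the-world pause time (PauseTotalNs).

```go
package main

import (
	"fmt"
	"runtime"
	"time"
)

// sink is a package-level variable, so every buffer stored in it must
// live on the heap and cannot be optimized away.
var sink []byte

// churn allocates n short-lived buffers of the given size (hypothetical workload).
func churn(n, size int) {
	for i := 0; i < n; i++ {
		sink = make([]byte, size)
	}
}

// gcDelta runs work and returns how many GC cycles completed while it ran
// and how much stop-the-world pause time accumulated.
func gcDelta(work func()) (cycles uint32, pause time.Duration) {
	var before, after runtime.MemStats
	runtime.ReadMemStats(&before)
	work()
	runtime.ReadMemStats(&after)
	cycles = after.NumGC - before.NumGC
	pause = time.Duration(after.PauseTotalNs - before.PauseTotalNs)
	return cycles, pause
}

func main() {
	// Roughly 640 MiB of garbage in 64 KiB chunks.
	cycles, pause := gcDelta(func() { churn(10000, 64<<10) })
	fmt.Printf("GC cycles: %d, total pause: %v\n", cycles, pause)
}
```

The numbers depend on the Go version, GOGC, and the machine, so treat them as a rough signal rather than a benchmark. Note that ReadMemStats itself briefly stops the world, so it belongs in experiments, not in a hot path.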

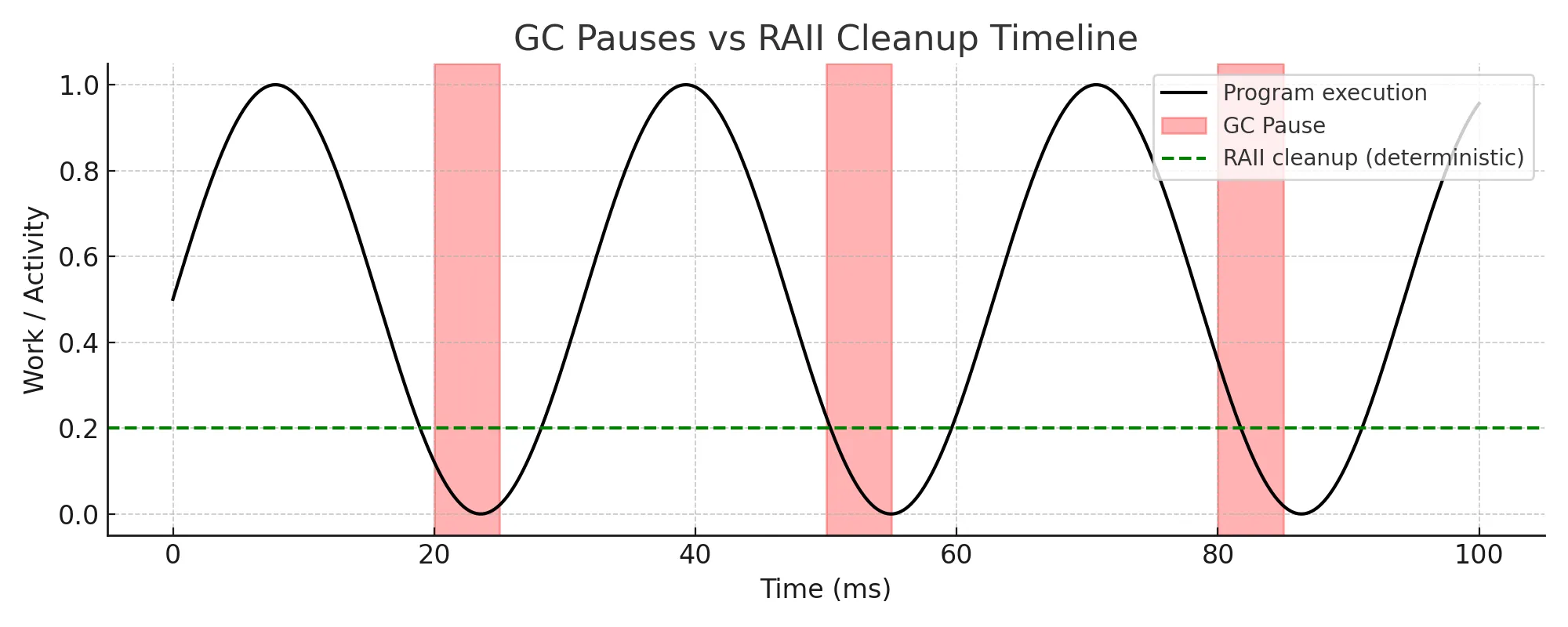

Visualizing GC Pauses vs. RAII

Figure: In Go, objects allocated on the heap depend on the garbage collector for cleanup, leading to periodic pauses. In RAII (as in C++), resources tied to scope are cleaned up deterministically as they go out of scope.

Examples

Memory Allocation

Take this code for example:

```go
func processItems(items []Item) {
	for _, item := range items {
		// If createTempObject returns a pointer that escapes, temp lives on the heap.
		temp := createTempObject(item)
		useTempObject(temp)
	} // temp goes out of scope here, but its memory stays on the heap until the GC reclaims it
}
```

Now, let’s look at the C equivalent:

```c
#include <stddef.h> /* size_t */

void processItems(Item* items, size_t count) {
    for (size_t i = 0; i < count; i++) {
        TempObject temp = createTempObject(items[i]); /* returned by value, lives on the stack */
        useTempObject(temp);
    } /* temp goes out of scope here, no heap allocation */
}
```

In Go’s version, if createTempObject returns a pointer (or its result otherwise escapes), every iteration allocates on the heap, and the garbage collector eventually cleans it up. If items is a large slice, this adds memory pressure and GC work, which can cause latency spikes that are hard to predict.

In the C version, temp is a local value on the stack, which is released automatically when it goes out of scope. This gives you tighter control and often lower latency, but as soon as an object has to outlive its scope you are back to malloc and free, with all their pitfalls.
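To be fair to Go, the same distinction exists there: whether a value lands on the heap depends on how it is returned and used. Here is a minimal sketch with made-up types (Item, TempObject) and helper names that are not from any real codebase. It uses testing.AllocsPerRun, which can be called from a regular program, to count allocations for a pointer-returning and a value-returning variant.

```go
package main

import (
	"fmt"
	"testing"
)

// Item and TempObject are hypothetical stand-ins for the types in the snippet above.
type Item struct{ ID int }

type TempObject struct {
	ID   int
	Data [64]byte
}

// sink is package-level, so anything stored in it must be heap-allocated.
var sink *TempObject

func newTempPtr(item Item) *TempObject { return &TempObject{ID: item.ID} }
func newTempVal(item Item) TempObject  { return TempObject{ID: item.ID} }

// processPtr keeps a pointer to each temp object alive via sink,
// which forces every one of them onto the heap.
func processPtr(items []Item) int {
	total := 0
	for _, item := range items {
		temp := newTempPtr(item)
		sink = temp
		total += temp.ID
	}
	return total
}

// processVal works with plain values that never leave the loop body,
// so the compiler is free to keep them on the stack.
func processVal(items []Item) int {
	total := 0
	for _, item := range items {
		temp := newTempVal(item)
		total += temp.ID
	}
	return total
}

func main() {
	items := make([]Item, 100)
	ptrAllocs := testing.AllocsPerRun(100, func() { processPtr(items) })
	valAllocs := testing.AllocsPerRun(100, func() { processVal(items) })
	fmt.Printf("pointer version: %.0f allocs/run, value version: %.0f allocs/run\n",
		ptrAllocs, valAllocs)
}
```

I would expect the pointer version to allocate once per item and the value version not at all, but that is the compiler’s call, which is exactly the “opaque” part: nothing in the signature of processItems tells you which one you got.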

HTTP Handlers

Now, let’s consider a simple HTTP handler in Go:

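```go
package main

import (
	"log"
	"net/http"
)

func main() {
	http.HandleFunc("/", func(w http.ResponseWriter, r *http.Request) {
		data := make([]byte, 1024*1024) // allocate a fresh 1 MiB buffer per request
		if _, err := w.Write(data); err != nil {
			log.Printf("write failed: %v", err)
		}
	})
	log.Fatal(http.ListenAndServe(":8080", nil))
}
```

In contrast, a bare-bones C++ version over raw sockets (no HTTP parsing at all) might look like this: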

```cpp
#include <cstdio>
#include <netinet/in.h>
#include <sys/socket.h>
#include <unistd.h>

void handleClient(int clientSocket) {
    char* data = new char[1024 * 1024](); // allocate 1 MiB, zero-initialized
    // send may write fewer bytes than requested; a real server would loop.
    send(clientSocket, data, 1024 * 1024, 0);
    delete[] data; // manual cleanup: forget this and every request leaks 1 MiB
}

int main() {
    int serverSocket = socket(AF_INET, SOCK_STREAM, 0);
    if (serverSocket < 0) {
        perror("socket");
        return 1;
    }

    sockaddr_in serverAddr{}; // zero-initialize before filling in the fields
    serverAddr.sin_family = AF_INET;
    serverAddr.sin_addr.s_addr = INADDR_ANY;
    serverAddr.sin_port = htons(8080);

    if (bind(serverSocket, reinterpret_cast<sockaddr*>(&serverAddr), sizeof(serverAddr)) < 0 ||
        listen(serverSocket, 5) < 0) {
        perror("bind/listen");
        close(serverSocket);
        return 1;
    }

    while (true) {
        int clientSocket = accept(serverSocket, nullptr, nullptr);
        if (clientSocket < 0) {
            continue; // skip failed accepts
        }
        handleClient(clientSocket);
        close(clientSocket);
    }
}
```

Looking at the Go snippet, every request that hits the server allocates 1 MiB of memory. The garbage collector will eventually clean this up, but under a rapid stream of requests, GC pressure mounts and performance can dip.

In the C++ example, you have to manage memory manually. This can be faster, but forgetting delete[] leaves you with leaks and brittle code.

The trade-off is clear: you get simplicity and speed of development at the cost of some performance and control. Or, you gain control and potential performance but at the cost of complexity and increased risk of bugs.
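There is some middle ground inside Go itself: reusing buffers instead of allocating a new one per request. The sketch below uses sync.Pool for that. The names bufPool, handle, and serveOnce are mine, and the handler is driven in-process through net/http/httptest so the example terminates instead of listening on a port.

```go
package main

import (
	"fmt"
	"log"
	"net/http"
	"net/http/httptest"
	"sync"
)

const bufSize = 1 << 20 // 1 MiB, same size as the handler above

// bufPool hands out reusable buffers. Storing *[]byte rather than []byte
// avoids an extra allocation each time a buffer is put back.
var bufPool = sync.Pool{
	New: func() any {
		b := make([]byte, bufSize)
		return &b
	},
}

// handle borrows a buffer, writes it, and returns it to the pool.
func handle(w http.ResponseWriter, r *http.Request) {
	bp := bufPool.Get().(*[]byte)
	defer bufPool.Put(bp) // Write does not retain the slice, so reuse is safe
	if _, err := w.Write(*bp); err != nil {
		log.Printf("write failed: %v", err)
	}
}

// serveOnce drives the handler in-process and reports how many bytes it wrote.
func serveOnce() int {
	rec := httptest.NewRecorder()
	req := httptest.NewRequest(http.MethodGet, "/", nil)
	handle(rec, req)
	return rec.Body.Len()
}

func main() {
	for i := 0; i < 3; i++ {
		fmt.Println("bytes written:", serveOnce())
	}
}
```

This is not free control: the pool may drop buffers at any GC cycle, and a pooled buffer can carry data from a previous request, so real code has to overwrite or reset it before use. It trims allocation pressure, but the lifetime decisions still belong to the runtime.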

Resource Management

Another example is managing resources like file handles or network connections. In Go, you typically use defer to ensure cleanup, but defer runs when the surrounding function returns, not when the enclosing block ends, so the resource’s lifetime isn’t tied to scope the way RAII ties it in C++.

```go
import (
	"io"
	"os"
)

func readFile(path string) ([]byte, error) {
	file, err := os.Open(path)
	if err != nil {
		return nil, err
	}
	defer file.Close() // file will be closed when the function returns

	// io.ReadAll replaces ioutil.ReadAll, which is deprecated since Go 1.16.
	data, err := io.ReadAll(file)
	if err != nil {
		return nil, err
	}
	return data, nil
}
```

In C++, you can use RAII to manage the lifetime of resources more tightly:

```cpp
#include <fstream>
#include <iterator>
#include <stdexcept>
#include <string>
#include <vector>

std::vector<char> readFile(const std::string& path) {
    std::ifstream file(path, std::ios::binary);
    if (!file) {
        throw std::runtime_error("Could not open file");
    }
    std::vector<char> data((std::istreambuf_iterator<char>(file)),
                           std::istreambuf_iterator<char>());
    return data; // file's destructor closes it when it goes out of scope
}
```

This pattern minimizes the chance of leaks while keeping code concise.
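The difference between function-scoped defer and block-scoped RAII shows up most clearly in loops. Here is a small sketch with a fake resource type (resource, open, and the two loop functions are invented for illustration) that records the order of events. Wrapping the loop body in a function literal is the usual Go workaround for getting per-iteration cleanup.

```go
package main

import (
	"fmt"
	"strings"
)

// resource is a hypothetical stand-in for a file or connection.
// It appends to a shared event log when opened and closed.
type resource struct {
	name   string
	events *[]string
}

func open(name string, events *[]string) *resource {
	*events = append(*events, "open "+name)
	return &resource{name: name, events: events}
}

func (r *resource) Close() {
	*r.events = append(*r.events, "close "+r.name)
}

// deferInLoop defers Close inside the loop: nothing is closed until
// the whole function returns, and then in reverse order.
func deferInLoop(names []string) (events []string) {
	for _, n := range names {
		r := open(n, &events)
		defer r.Close()
	}
	events = append(events, "loop done")
	return events
}

// scopedInLoop wraps each iteration in a function literal, so the
// deferred Close runs at the end of that iteration.
func scopedInLoop(names []string) (events []string) {
	for _, n := range names {
		func() {
			r := open(n, &events)
			defer r.Close()
		}()
	}
	events = append(events, "loop done")
	return events
}

func main() {
	names := []string{"a", "b"}
	fmt.Println(strings.Join(deferInLoop(names), ", "))
	fmt.Println(strings.Join(scopedInLoop(names), ", "))
}
```

In the first variant both resources stay open until after “loop done”; in the second each one is closed before the next is opened. It works, but you have to remember the idiom, whereas a C++ destructor gives you that behavior by default.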

So?

So far, I’ve used a few snippets to illustrate the trade-offs between Go’s garbage collection and other memory management strategies like RAII in C++. You might be thinking, “So what? Everyone knows this.” And you’d be right.

My point is, can we do better? Can we have the best of both worlds?

Can we have a language that offers the simplicity and speed of Go, but also gives us the control and predictability of RAII or manual memory management when we need it?

What Could Be Next

I’m sure there are ways Go could evolve its memory management to offer more flexibility without sacrificing its core simplicity.

But to be honest, I wonder if the future lies in languages that can seamlessly blend multiple memory management strategies. One of the most promising directions here comes from linear types, a type-system approach in which every value must be used exactly once. Because the compiler knows exactly where each value is consumed, cleanup is deterministic and needs no garbage collector.
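Go cannot express this today, so the closest thing I can show is a runtime imitation. In the sketch below, Handle, NewHandle, Consume, and ErrConsumed are all hypothetical names. The wrapper only detects a second use when it happens; a real linear type system would reject that code at compile time, and would also reject code that never consumes the value.

```go
package main

import (
	"errors"
	"fmt"
)

// ErrConsumed is returned when a Handle is used more than once.
var ErrConsumed = errors.New("handle already consumed")

// Handle is a hypothetical "use exactly once" wrapper around a resource.
type Handle struct {
	name     string
	consumed bool
}

// NewHandle creates a fresh, unconsumed handle.
func NewHandle(name string) *Handle {
	return &Handle{name: name}
}

// Consume releases the underlying resource. The first call succeeds;
// every later call fails with ErrConsumed.
func (h *Handle) Consume() (string, error) {
	if h.consumed {
		return "", ErrConsumed
	}
	h.consumed = true
	return "released " + h.name, nil
}

func main() {
	h := NewHandle("conn-1")

	msg, err := h.Consume()
	fmt.Println(msg, err)

	// A second use is a bug. Here it surfaces only at runtime.
	_, err = h.Consume()
	fmt.Println(err)
}
```

The gap between this and the real thing is the whole point: the check costs a branch and a flag, it is not safe for concurrent use as written, and nothing stops you from forgetting to call Consume at all.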

Imagine a language that lets you choose between GC, RAII, and manual memory management on a per-module or even per-function basis.

You could write high-level business logic with GC for speed and safety, while performance-critical sections could use RAII or manual memory management for fine-tuned control…

What if we could choose the right tool for the job without switching languages or dealing with complex interop?

What if we could stick to one language that we love, but use it in different ways depending on the task at hand?

Closing

This isn’t a complaint about Go. I use it daily, and it’s brilliant at what it set out to do. But memory management is never “solved.” It’s a moving target, shaped by hardware, workloads, and ideas from other languages.

So maybe the real question isn’t “Is Go’s GC good enough?” but “What would a next-generation Go look like if it embraced multiple memory management strategies, and perhaps even borrowed ideas like linear types to tighten control over resources?”

That’s the kind of question I want to keep asking here.

In the upcoming posts, I’ll explore some ideas and prototypes around this theme, starting with linear types.

Until next time.